Context Economics and Optimization

Skill 5 of 9 | Pillar II: Knowledge & Context

The economic foundation that transforms production AI from a cost center into a financially sustainable system through sophisticated caching, compression, and optimization.

The Most Expensive Resource in AI

Here's a truth that will determine whether your AI deployment succeeds or fails: every token costs money. Input tokens, output tokens, cached tokens, wasted tokens—they all have a price. And in production systems processing thousands or millions of requests, those costs compound faster than most organizations anticipate.

Skill 5 represents the critical competency for managing this most valuable and expensive resource in agentic AI systems: context. The 2026 AI strategist must become a "context economist," understanding not just how to build systems that work, but how to build systems that work economically.

This isn't about penny-pinching—it's about viability. A customer service chatbot that costs $5,000 per day might be impressive, but it's not sustainable. The same chatbot optimized with proper caching and compression might cost $800 per day while delivering identical quality. That's the difference between a proof-of-concept and a product.

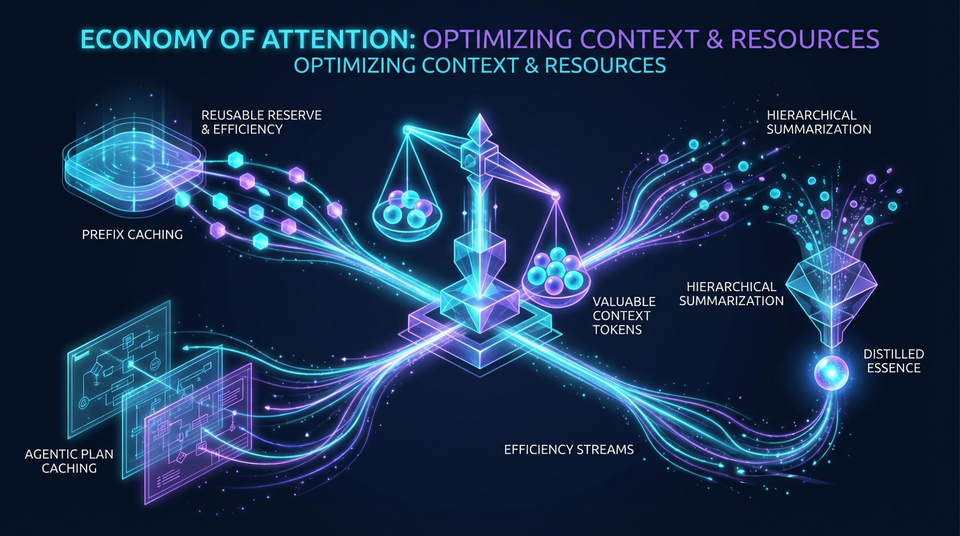

Context economics encompasses three critical areas: leveraging computational reuse through caching, reducing context size through intelligent compression, and caching reasoning patterns for efficiency. Master these, and you've unlocked the economic foundation for production AI.

The Three Sub-Skills of Context Economics

| Sub-Skill | Focus Area | Key Concepts |
|---|---|---|
| 5.1 Prefix Caching | Leveraging computational reuse in inference engines | KV cache, prefix caching, workflow-aware eviction |
| 5.2 Context Compaction | Reducing context size while preserving information | Hierarchical summarization, sliding windows, semantic compression |
| 5.3 Plan Caching | Caching and reusing reasoning structures | Abstract plan caching, plan similarity, dynamic adaptation |

5.1 Prefix Caching and KV Cache Management

The most powerful optimization technique in production AI isn't algorithmic cleverness; it's computational reuse. When an LLM processes a prompt, it computes internal attention states (the keys and values held in the KV cache) for each token. If subsequent requests share the same prefix, why recompute what you've already computed?

Understanding the KV Cache

During transformer inference, the model computes Key and Value tensors for each token in the context, at every attention layer. Recomputing them for the whole context on every generated token would be expensive and wasteful. The KV cache stores these computed states so that each new token only needs its own keys and values computed, and attention reuses the rest.

Prefix caching extends this concept across requests. When a new request shares the same prefix as a previous one (same system prompt, same RAG context), the cached KV state is loaded, and only the unique suffix needs to be processed.

The benefits are dramatic:

- 50-90% reduction in time-to-first-token (TTFT) for cached prefixes

- Proportional cost savings on input token processing

- Improved throughput through reduced computational load
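To make the reuse concrete, here is a minimal sketch of the bookkeeping behind prefix caching. It is a toy model, not an inference engine: `ToyPrefixCache` is a hypothetical name, whitespace-split words stand in for real tokens, and no actual KV tensors are stored. It only counts how many tokens could be reused versus computed fresh.

```python
from typing import Sequence


class ToyPrefixCache:
    """Remembers earlier token sequences and reports the reusable prefix length."""

    def __init__(self) -> None:
        self._seen: list[tuple[str, ...]] = []

    def longest_cached_prefix(self, tokens: Sequence[str]) -> int:
        best = 0
        for cached in self._seen:
            n = 0
            # Count matching tokens from the start; stop at the first mismatch.
            for a, b in zip(cached, tokens):
                if a != b:
                    break
                n += 1
            best = max(best, n)
        return best

    def process(self, tokens: Sequence[str]) -> tuple[int, int]:
        """Return (reused_tokens, freshly_computed_tokens) and remember the request."""
        reused = self.longest_cached_prefix(tokens)
        self._seen.append(tuple(tokens))
        return reused, len(tokens) - reused


cache = ToyPrefixCache()
system = "You are a support agent .".split()
first = cache.process(system + "Where is my order ?".split())
second = cache.process(system + "Cancel my subscription .".split())
print(first, second)
```

The second request reuses the whole shared system prompt and only pays for its own suffix, which is the effect the TTFT and cost figures describe.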

Cache-Friendly Prompt Design

To maximize prefix caching benefits, prompts must be designed with caching in mind. The key insight: static content goes first, dynamic content goes last.

Optimal Prompt Structure:

[System Prompt - Static, rarely changes]

[RAG Context - Semi-static, changes slowly]

[Conversation History - Dynamic, grows over time]

[Current User Query - Unique to each request]

This structure maximizes cache hit rates because the most stable content is at the prefix (which gets cached) while the variable content is at the suffix (which is processed fresh each time).
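A small sketch of that ordering, assuming a plain-string prompt. `PromptParts`, `build_prompt`, and `shared_prefix_chars` are hypothetical helpers, and the comparison is done on characters for simplicity, whereas real caches match on tokens.

```python
from dataclasses import dataclass, field


@dataclass
class PromptParts:
    system_prompt: str                                  # static
    rag_context: str                                    # semi-static
    history: list[str] = field(default_factory=list)    # dynamic
    user_query: str = ""                                # unique per request


def build_prompt(parts: PromptParts) -> str:
    """Join sections from most stable to least stable, skipping empty ones."""
    sections = [
        parts.system_prompt.strip(),
        parts.rag_context.strip(),
        "\n".join(parts.history),
        parts.user_query.strip(),
    ]
    return "\n\n".join(s for s in sections if s)


def shared_prefix_chars(a: str, b: str) -> int:
    """Length of the common leading run of two prompts."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


p1 = build_prompt(PromptParts("SYS", "DOCS", [], "question one"))
p2 = build_prompt(PromptParts("SYS", "DOCS", [], "another question"))
print(shared_prefix_chars(p1, p2))  # the static sections are fully shared
```

If the query were placed first instead, the two prompts would diverge at the first character and nothing after it could be served from cache.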

Best practices for cache-friendly design:

- Use consistent formatting in static sections—even whitespace changes break cache hits

- Avoid unnecessary variations in system prompts

- Batch similar requests together to increase cache reuse

- Monitor cache hit rates and optimize accordingly
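One way to catch accidental prefix drift and get a rough hit-rate signal is to fingerprint the static prefix on the client side. The sketch below is an assumption-laden approximation: `prefix_fingerprint` and `HitRateMonitor` are hypothetical names, it ignores TTLs and provider-side eviction, and the authoritative numbers are the cached-token counts your provider reports.

```python
import hashlib


def prefix_fingerprint(static_prefix: str) -> str:
    """Hash the exact bytes of the prefix; any change, even whitespace, alters it."""
    return hashlib.sha256(static_prefix.encode("utf-8")).hexdigest()[:12]


class HitRateMonitor:
    """Estimates cache hits by checking whether a prefix was seen before."""

    def __init__(self) -> None:
        self._seen: set[str] = set()
        self.hits = 0
        self.total = 0

    def record(self, static_prefix: str) -> bool:
        fp = prefix_fingerprint(static_prefix)
        hit = fp in self._seen
        self._seen.add(fp)
        self.total += 1
        self.hits += int(hit)
        return hit

    @property
    def hit_rate(self) -> float:
        return self.hits / self.total if self.total else 0.0


monitor = HitRateMonitor()
monitor.record("You are a helpful agent.")    # first sighting: miss
monitor.record("You are a helpful agent.")    # identical prefix: hit
monitor.record("You are a  helpful agent.")   # extra space: miss
print(f"{monitor.hit_rate:.2f}")
```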

Platform-Specific Caching Implementations

Different LLM providers implement prefix caching with varying capabilities:

Anthropic Prompt Caching:

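- Caches prefixes of 1024+ tokens (the minimum is higher on some models)

- 90% cost reduction on cache reads; cache writes cost 25% more than uncached input

- 5-minute default TTL (time-to-live), refreshed on each cache hit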

- Explicit cache breakpoints via API

OpenAI Prompt Caching:

- Automatic caching for prompts of 1,024 tokens or more

- 50% cost reduction for cached tokens at launch; the discount varies by model

- Hit rates depend on request pattern and routing

Gemini Context Caching:

- Explicit cache creation API

- Minimum cacheable size applies (32,768 tokens at launch; lower on newer models)

- Hourly storage costs apply

- Manual cache management

Understanding these differences is essential for multi-cloud deployments and cost optimization. The same application might use different strategies on different platforms.
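A rough way to compare platforms is to model input cost as a function of the cached fraction and the cached-token discount. This is a simplified sketch: `input_cost` is a hypothetical helper, the $3 per million tokens base price is an assumed figure for illustration, and cache-write premiums and hourly storage fees are deliberately left out.

```python
def input_cost(
    tokens: int,
    cached_fraction: float,
    price_per_mtok: float,
    cached_discount: float,
) -> float:
    """Input cost in USD for one request.

    cached_fraction: share of input tokens served from cache (0.0 to 1.0).
    cached_discount: price reduction on cached tokens (0.90 means 90% off).
    """
    if not 0.0 <= cached_fraction <= 1.0:
        raise ValueError("cached_fraction must be between 0 and 1")
    cached = tokens * cached_fraction
    fresh = tokens - cached
    billable = fresh + cached * (1.0 - cached_discount)
    return billable * price_per_mtok / 1_000_000


# Assumed workload: 10,000 input tokens, 80% of them cached, $3 per million tokens.
uncached = input_cost(10_000, 0.0, 3.0, 0.0)
with_90 = input_cost(10_000, 0.8, 3.0, 0.90)
with_50 = input_cost(10_000, 0.8, 3.0, 0.50)
print(f"{uncached:.4f} {with_90:.4f} {with_50:.4f}")
```

Under these assumptions the same workload costs roughly half as much at a 90% discount as at a 50% discount, which is why the strategy may differ by platform.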

Workflow-Aware Eviction Policies

Standard cache eviction policies like LRU (Least Recently Used) are suboptimal for agentic workflows. Agents often loop back to earlier instructions or follow predictable patterns that LRU doesn't anticipate.

Research like KVFlow demonstrates that analyzing an agent's workflow graph enables smarter eviction policies. By predicting which cached states will be needed soon (based on workflow patterns), you can keep relevant context "warm" and reduce cache misses by 30-50%.
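The idea can be illustrated with a deliberately simplified sketch. This is not KVFlow's actual algorithm: it assumes the workflow is a known directed graph of agent steps, uses breadth-first distance as a stand-in for "how soon will this cache entry be needed", and evicts the entry that is furthest away. All names are hypothetical.

```python
from collections import deque


def steps_until_next_use(graph: dict[str, list[str]], current: str) -> dict[str, int]:
    """BFS distance, in workflow steps, from the current node to each reachable node."""
    dist = {current: 0}
    queue = deque([current])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in dist:
                dist[nxt] = dist[node] + 1
                queue.append(nxt)
    return dist


def choose_victim(cached: list[str], graph: dict[str, list[str]], current: str) -> str:
    """Evict the cached entry whose next use is furthest away (unreachable counts as never)."""
    dist = steps_until_next_use(graph, current)
    return max(cached, key=lambda name: dist.get(name, float("inf")))


# Hypothetical looping workflow: planner -> researcher -> writer -> reviewer -> planner
workflow = {
    "planner": ["researcher"],
    "researcher": ["writer"],
    "writer": ["reviewer"],
    "reviewer": ["planner"],
}
victim = choose_victim(["planner", "researcher", "reviewer"], workflow, current="writer")
print(victim)
```

With the writer running, LRU would evict the planner because it ran longest ago, even though the loop returns to it in two steps. The workflow-aware choice evicts the researcher, which is three steps away.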

5.2 Context Compaction and Summarization

As agents run for extended periods, conversation histories grow unbounded. Without intervention, context windows fill up, costs skyrocket, and eventually you hit hard limits. Context compaction techniques reduce this growth while preserving essential information.

Hierarchical Summarization Strategies

Instead of maintaining full conversation history, generate summaries at multiple levels of granularity:

Per-Turn Summaries: Brief summary of each user-agent exchange. Captures the essence of what was discussed without verbatim transcripts.

Per-Session Summaries: Summary of an entire conversation session. Useful for long-running interactions that span hours or days.

Per-User Summaries: Long-term profile capturing interaction patterns, preferences, and key information. Persists across sessions.

The system dynamically selects the appropriate level based on the current task. A simple query might use only the per-session summary, while a complex task requiring detailed context would use per-turn summaries for recent turns.

Compression ratios range from 5:1 to 20:1 depending on granularity and content type. At 10:1, a 10,000-token conversation history compresses to about 1,000 tokens while retaining most task-relevant information.
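One possible selection policy, sketched under assumptions: summaries already exist with known token counts, the coarse per-user and per-session summaries are included first if they fit, and the remaining budget is spent on the most recent per-turn summaries. `Summary` and `select_context` are hypothetical names, and a real system would likely also weigh task type.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Summary:
    level: str   # "turn", "session", or "user"
    text: str
    tokens: int


def select_context(
    per_turn: list[Summary],
    per_session: Summary,
    per_user: Summary,
    budget: int,
) -> list[Summary]:
    """Pick summaries that fit the token budget, coarse levels first."""
    chosen: list[Summary] = []
    remaining = budget
    for summary in (per_user, per_session):
        if summary.tokens <= remaining:
            chosen.append(summary)
            remaining -= summary.tokens
    # Spend what is left on per-turn summaries, newest first.
    recent: list[Summary] = []
    for summary in reversed(per_turn):
        if summary.tokens > remaining:
            break
        recent.append(summary)
        remaining -= summary.tokens
    # Restore chronological order for the per-turn part.
    return chosen + list(reversed(recent))


turns = [Summary("turn", f"t{i}", 40) for i in (1, 2, 3)]
session = Summary("session", "s", 100)
user = Summary("user", "u", 50)
print([s.text for s in select_context(turns, session, user, budget=240)])
```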

Sliding Window with Summarization

This hybrid approach maintains detailed history for recent turns and summarized history for older interactions:

[Summarized History: Turns 1-50] (compressed)

[Detailed History: Turns 51-60] (full fidelity)

[Current Turn: 61]

The window size is a tunable parameter. Larger windows preserve more detail but increase cost. Optimal size depends on task complexity, budget constraints, and quality requirements.

Implementation considerations:

- Trigger summarization when history exceeds threshold

- Use incremental summarization to avoid reprocessing

- Preserve key entities and decisions in summaries

- Include timestamps for temporal reasoning
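The sliding window and the incremental-summarization consideration can be sketched together. The summarizer is injected as a callable so that an LLM call can be swapped in; `naive_summarizer` here is a placeholder that just clips text, and all names are hypothetical.

```python
from typing import Callable

# (previous_summary, evicted_turns) -> new_summary
Summarizer = Callable[[str, list[str]], str]


class SlidingWindowHistory:
    """Keeps the last `window_size` turns verbatim and folds older ones into a summary."""

    def __init__(self, window_size: int, summarize: Summarizer) -> None:
        if window_size < 1:
            raise ValueError("window_size must be at least 1")
        self.window_size = window_size
        self.summarize = summarize
        self.summary = ""
        self.recent: list[str] = []

    def add_turn(self, turn: str) -> None:
        self.recent.append(turn)
        overflow = len(self.recent) - self.window_size
        if overflow > 0:
            evicted, self.recent = self.recent[:overflow], self.recent[overflow:]
            # Incremental: only the evicted turns are summarized, never the full history.
            self.summary = self.summarize(self.summary, evicted)

    def render(self) -> str:
        parts = []
        if self.summary:
            parts.append(f"[Summarized History]\n{self.summary}")
        parts.append("[Detailed History]\n" + "\n".join(self.recent))
        return "\n\n".join(parts)


def naive_summarizer(previous: str, evicted: list[str]) -> str:
    """Stand-in for an LLM call: keeps the first 20 characters of each evicted turn."""
    clipped = [turn[:20] for turn in evicted]
    return "; ".join(([previous] if previous else []) + clipped)


history = SlidingWindowHistory(window_size=2, summarize=naive_summarizer)
for i in range(1, 5):
    history.add_turn(f"turn {i}")
print(history.render())
```

Here the trigger is a turn count; a token-count threshold would follow the same shape with a different overflow check.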

Semantic Compression Techniques

Beyond simple summarization, semantic compression identifies and removes redundancy while preserving critical information:

Entity Extraction: Identify key entities and preserve them explicitly, discarding verbose descriptions. "The customer from New York who called yesterday about their order" becomes "Customer: John Smith (NYC), Issue: Order #12345"

Coreference Resolution: Replace repeated references with compact representations. "The system then processed the request, and after processing was complete, the system returned the results" becomes "System processed request → returned results"

Information-Theoretic Compression: Use entropy-based methods to identify high-information content. Redundant explanations are dropped; unique, informative content is preserved.

Semantic compression is typically lossy—some information is discarded. The art is designing compression that preserves task-critical information while discarding noise.
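As a crude illustration of lossy redundancy removal, the sketch below drops sentences whose word overlap with an already-kept sentence is high. Jaccard similarity on lowercase words is a lexical stand-in chosen for this example, not the entropy-based or semantic methods named above, and the 0.6 threshold is an arbitrary assumption.

```python
import re


def _words(sentence: str) -> set[str]:
    """Lowercase word set; '#' is kept so identifiers like #12345 survive."""
    return set(re.findall(r"[a-z0-9#]+", sentence.lower()))


def drop_redundant(sentences: list[str], threshold: float = 0.6) -> list[str]:
    """Keep a sentence only if it is not too similar to one already kept."""
    kept: list[str] = []
    kept_words: list[set[str]] = []
    for sentence in sentences:
        words = _words(sentence)
        if not words:
            continue
        # Jaccard similarity: shared words divided by all words in either sentence.
        is_duplicate = any(
            len(words & seen) / len(words | seen) >= threshold for seen in kept_words
        )
        if not is_duplicate:
            kept.append(sentence)
            kept_words.append(words)
    return kept


notes = [
    "The order #12345 has not arrived.",
    "Order #12345 has not arrived yet.",
    "Customer prefers email contact.",
]
print(drop_redundant(notes))
```

The second sentence is discarded, including its "yet", which shows the lossy trade-off in miniature: set the threshold too low and task-critical details go with the noise.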

5.3 Agentic Plan Caching

Agentic plan caching is a newer optimization technique that caches entire reasoning plans rather than just context. When agents encounter similar requests repeatedly, why regenerate the reasoning from scratch?

Abstract Plan Reuse

Agents often generate similar reasoning plans for similar requests. "Book a flight" and "book a hotel" both follow a common abstract plan: gather requirements → search options → compare alternatives → select best option → confirm booking.

Agentic Plan Caching captures these abstract plan structures and populates them with new variables for similar requests. Instead of reasoning through the entire workflow each time, the agent retrieves a proven plan template and adapts it.

Benefits are substantial:

- 40-60% reduction in latency for routine tasks

- Proportional cost savings on reasoning tokens

- Improved consistency through standardized approaches

- Faster response times for common workflows

Plan Similarity Detection

To leverage cached plans, the system must determine when a new request is similar enough to use a cached plan. This requires:

Plan Embeddings: Encode plans as vectors capturing their semantic structure—not just what they do, but how they approach problems.

Similarity Metrics: Define thresholds for when plans are "similar enough" to reuse. Too strict and you rarely get cache hits; too loose and you apply inappropriate plans.

Efficient Retrieval: Search the plan cache quickly. Vector similarity search enables sub-millisecond retrieval even with thousands of cached plans.
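These three pieces can be sketched end to end with toy components. Everything here is an assumption for illustration: a bag-of-words count vector stands in for a learned embedding, requests rather than plans are embedded, the 0.5 threshold is arbitrary, and a linear scan replaces a real vector index.

```python
import math
from collections import Counter
from typing import Optional


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would use a learned model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class PlanCache:
    def __init__(self, threshold: float = 0.5) -> None:
        self.threshold = threshold
        self._entries: list[tuple[Counter, str]] = []

    def add(self, request: str, plan: str) -> None:
        self._entries.append((embed(request), plan))

    def lookup(self, request: str) -> Optional[str]:
        """Return the best-matching plan, or None if nothing clears the threshold."""
        query = embed(request)
        best_score, best_plan = 0.0, None
        for vector, plan in self._entries:
            score = cosine(query, vector)
            if score > best_score:
                best_score, best_plan = score, plan
        return best_plan if best_score >= self.threshold else None


plans = PlanCache(threshold=0.5)
plans.add("book a flight to paris", "gather -> search -> compare -> select -> confirm")
print(plans.lookup("book a flight to tokyo"))
print(plans.lookup("summarize this quarterly report"))
```

Raising the threshold toward 1.0 turns the first lookup into a miss, which is the strict-versus-loose trade-off in practice.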

Dynamic Plan Adaptation

Cached plans are abstract templates that must be adapted to specific contexts:

Parameter Substitution: Replace placeholder variables with actual values. "Book {transport} from {origin} to {destination}" becomes "Book flight from NYC to LAX"

Plan Validation: Verify the adapted plan is valid for the current context. A plan for domestic travel might not apply to international bookings.

Correction and Refinement: If validation fails, the agent refines the plan rather than abandoning it entirely. Often small adjustments salvage an otherwise useful cached plan.
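Substitution and validation can be sketched with the standard library alone. The template syntax matches the example above; `required_fields`, `adapt_plan`, and `validate_booking` are hypothetical helpers, and the domestic-airport check is an invented validation rule for illustration.

```python
from string import Formatter


def required_fields(template: str) -> set[str]:
    """Names of the {placeholders} that appear in a plan template."""
    return {name for _, name, _, _ in Formatter().parse(template) if name}


def adapt_plan(template: str, params: dict[str, str]) -> str:
    """Fill the template, failing loudly if any placeholder has no value."""
    missing = required_fields(template) - set(params)
    if missing:
        raise KeyError(f"missing parameters: {sorted(missing)}")
    return template.format(**params)


def validate_booking(params: dict[str, str], domestic_airports: set[str]) -> list[str]:
    """Return a list of problems; an empty list means the domestic plan applies."""
    problems = []
    for key in ("origin", "destination"):
        if params.get(key) not in domestic_airports:
            problems.append(f"{key} {params.get(key)!r} is outside the domestic plan's scope")
    return problems


template = "Book {transport} from {origin} to {destination}"
params = {"transport": "flight", "origin": "NYC", "destination": "LAX"}
print(adapt_plan(template, params))
print(validate_booking({"origin": "NYC", "destination": "LHR"}, {"NYC", "LAX"}))
```

A non-empty problem list is the signal to hand the plan back to the agent for refinement instead of discarding it.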

Real-World Cost Impact

The economic impact of context optimization is dramatic. Consider these production scenarios:

| Scenario | Naive Cost | Optimized Cost | Savings |
|---|---|---|---|
| Customer Service (10K conversations/day) | $5,000/day | $800/day | 84% |
| Code Generation (50K token contexts) | $0.50/request | $0.05/request | 90% |
| Research Assistant (document analysis) | $2.00/query | $0.30/query | 85% |
| Workflow Automation (1K bookings/day) | $1,000/day | $450/day | 55% |

These aren't theoretical projections—they're achievable through systematic application of prefix caching, context compaction, and plan caching. The customer service chatbot achieves 84% savings through prefix caching (fixed system prompts) and sliding window summarization. The code generation assistant achieves 90% savings through aggressive prefix caching of large codebase contexts.

Annual impact at scale:

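- Customer service: $1.5M+ annual savings ($4,200 saved per day over 365 days)

- Research assistant: $600K+ annual savings per major deployment (at $1.70 saved per query, this implies roughly 1,000 queries per day)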

- The difference between "too expensive to deploy" and "profitable product"
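The table percentages and the annual customer service figure follow from simple arithmetic, sketched here so the same calculation can be rerun with your own numbers. The function names are hypothetical and a 365-day year is assumed.

```python
def savings_pct(naive: float, optimized: float) -> int:
    """Percentage saved relative to the naive cost, rounded to a whole percent."""
    return round(100 * (naive - optimized) / naive)


def annual_savings(naive_daily: float, optimized_daily: float, days: int = 365) -> float:
    """Savings over a year for costs quoted per day."""
    return (naive_daily - optimized_daily) * days


scenarios = {
    "Customer Service": (5000.0, 800.0),
    "Code Generation": (0.50, 0.05),
    "Research Assistant": (2.00, 0.30),
    "Workflow Automation": (1000.0, 450.0),
}
for name, (naive, optimized) in scenarios.items():
    print(f"{name}: {savings_pct(naive, optimized)}%")

print(f"Customer service per year: ${annual_savings(5000.0, 800.0):,.0f}")
```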

The Principle-Based Transformation

From Naive Context Management...

- Sending full conversation history with every request

- No caching—regenerating everything from scratch

- Ignoring context costs until bills arrive

- One-size-fits-all approach across platforms

To Context Economics...

- Understanding caching theory and computational reuse

- Mastering compression and summarization principles

- Applying economic optimization to every context decision

- Platform-aware strategies that maximize value

Transferable Competencies

Mastering context economics builds expertise in:

- Caching Theory: Cache hierarchies, eviction policies, hit rate optimization, cache coherence

- Computational Economics: Cost modeling, resource allocation, optimization under constraints

- Information Theory: Compression, entropy, information preservation, lossy vs. lossless tradeoffs

- Natural Language Processing: Summarization, entity extraction, semantic analysis

- Workflow Analysis: Graph analysis, pattern recognition, predictive modeling

- Performance Engineering: Profiling, bottleneck identification, optimization techniques

Common Pitfalls to Avoid

- Ignoring Caching: Not leveraging platform caching features leaves massive cost savings on the table

- Poor Prompt Structure: Placing dynamic content before static content destroys cache hit rates

- Over-Compression: Aggressive summarization that loses critical information degrades quality

- Static Eviction Policies: Using LRU without considering workflow patterns wastes cache capacity

- No Cost Tracking: Not measuring the economic impact of optimizations—you can't improve what you don't measure

- Premature Optimization: Optimizing before understanding actual usage patterns leads to wrong priorities

- Cache Invalidation Failures: Not properly invalidating stale cached content causes subtle bugs

- Ignoring Platform Differences: Not adapting strategies to platform-specific caching implementations

Implementation Guidance

For Architects: Design prompt structures for maximum cache reuse from day one. Choose appropriate platform caching features. Define context budgets and optimization objectives. Establish cost and performance monitoring.

For Developers: Implement cache-friendly prompt templates. Build hierarchical summarization pipelines. Create sliding window context management. Add cost tracking to every LLM call.

For Operations: Monitor cache hit rates and optimization opportunities. Track context costs per request and per user. Analyze workflow patterns for cache optimization. Tune cache TTLs and eviction policies.
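A minimal sketch of per-call cost tracking, covering both the developer task (cost on every LLM call) and the operations task (cost per user, cache hit rate). `Usage` and `CostTracker` are hypothetical names, and the prices in the example are assumed figures in USD per million tokens, not any provider's actual rates.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Usage:
    input_tokens: int    # total prompt tokens, including cached ones
    cached_tokens: int   # portion of input_tokens served from cache
    output_tokens: int


class CostTracker:
    """Accumulates cost per user and a token-weighted cache hit rate."""

    def __init__(self, input_price: float, cached_price: float, output_price: float) -> None:
        self.input_price = input_price
        self.cached_price = cached_price
        self.output_price = output_price
        self.cost_by_user: dict[str, float] = defaultdict(float)
        self.total_input = 0
        self.total_cached = 0

    def record(self, user_id: str, usage: Usage) -> float:
        """Record one call and return its cost in USD."""
        fresh = usage.input_tokens - usage.cached_tokens
        cost = (
            fresh * self.input_price
            + usage.cached_tokens * self.cached_price
            + usage.output_tokens * self.output_price
        ) / 1_000_000
        self.cost_by_user[user_id] += cost
        self.total_input += usage.input_tokens
        self.total_cached += usage.cached_tokens
        return cost

    @property
    def cache_hit_rate(self) -> float:
        return self.total_cached / self.total_input if self.total_input else 0.0


tracker = CostTracker(input_price=3.0, cached_price=0.3, output_price=15.0)
cost = tracker.record("user-1", Usage(input_tokens=10_000, cached_tokens=8_000, output_tokens=500))
print(f"${cost:.4f} at {tracker.cache_hit_rate:.0%} cached")
```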

Looking Forward

The field is evolving toward:

- Learned Compression: ML models that learn optimal compression strategies for specific tasks

- Predictive Caching: Anticipating future context needs and pre-caching proactively

- Cross-Request Optimization: Sharing and reusing context across different users and sessions

- Hardware-Aware Caching: Optimizing for specific GPU architectures and memory hierarchies

- Semantic Caching: Caching based on semantic similarity rather than exact token matches

Next Skill: Data Governance — Ensuring AI systems are grounded in trustworthy, governed data.
